"Racist" Computer Algorithm Predicts that Blacks are More Likely to Commit Crime

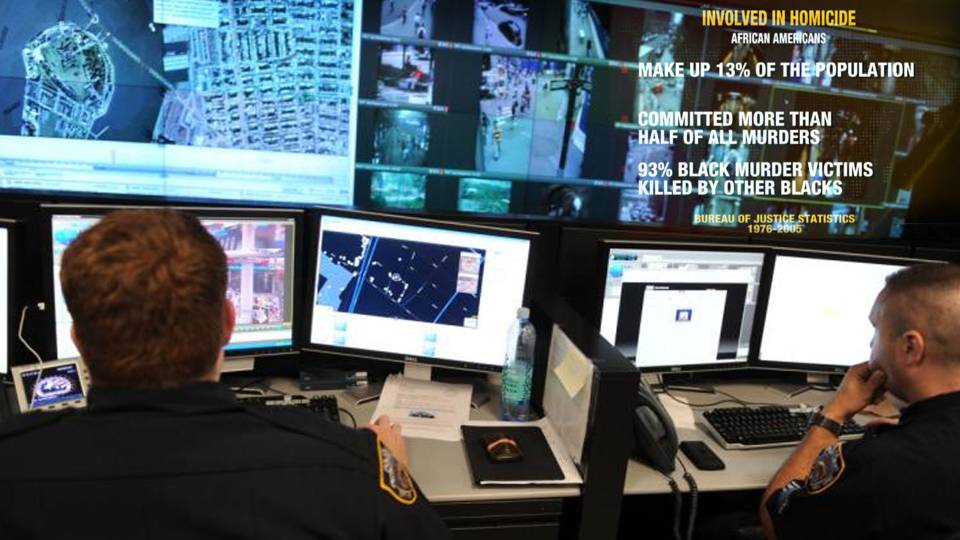

The Washington Post and SJWs say that computerized predictive policing is "racist" and biased after the algorithm predicts that blacks are more likely to commit crime, despite existing statistics (see below).

Big data has expanded to the criminal justice system. In Los Angeles, police use computerized “predictive policing” to anticipate crimes and allocate officers. In Fort Lauderdale, Fla., machine-learning algorithms are used to set bond amounts. In states across the country, data-driven estimates of the risk of recidivism are being used to set jail sentences.

Advocates say these data-driven tools remove human bias from the system, making it more fair as well as more effective. But even as they have become widespread, we have little information about exactly how they work. Few of the organizations producing them have released the data and algorithms they use to determine risk.

We need to know more, because it’s clear that such systems face a fundamental problem: The data they rely on are collected by a criminal justice system in which race makes a big difference in the probability of arrest — even for people who behave identically. Inputs derived from biased policing will inevitably make black and Latino defendants look riskier than white defendants to a computer. As a result, data-driven decision-making risks exacerbating, rather than eliminating, racial bias in criminal justice.

Consider a judge tasked with making a decision about bail for two defendants, one black and one white. Our two defendants have behaved in exactly the same way prior to their arrest: They used drugs in the same amount, committed the same traffic offenses, owned similar homes and took their two children to the same school every morning. But the criminal justice algorithms do not rely on all of a defendant’s prior actions to reach a bail assessment, only on those actions for which he or she has been previously arrested and convicted. Because of racial biases in arrest and conviction rates, the black defendant is more likely to have a prior conviction than the white one, despite identical conduct. A risk assessment relying on racially compromised criminal-history data will unfairly rate the black defendant as riskier than the white defendant.

To make matters worse, risk-assessment tools typically evaluate their success in predicting a defendant’s dangerousness on rearrests — not on defendants’ overall behavior after release. If our two defendants return to the same neighborhood and continue their identical lives, the black defendant is more likely to be arrested. Thus, the tool will falsely appear to predict dangerousness effectively, because the entire process is circular: Racial disparities in arrests bias both the predictions and the justification for those predictions.
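The circularity described above can be illustrated with a small simulation. This is only a sketch under stated assumptions, not a model of any real risk-assessment tool: the two groups "A" and "B", the offense rate, and the arrest probabilities are all hypothetical numbers chosen for illustration. Both groups behave identically; the only difference is how likely an offense is to lead to an arrest.

```python
import random


def simulate(n_per_group=50_000, offense_rate=0.3, arrest_prob=None, seed=0):
    """Simulate two groups with identical behavior but unequal arrest odds.

    All numbers are hypothetical and chosen only for illustration.
    """
    if arrest_prob is None:
        # Assumed chance that an offense leads to an arrest, per group.
        arrest_prob = {"A": 0.2, "B": 0.4}
    rng = random.Random(seed)
    results = {}
    for group, p_arrest in arrest_prob.items():
        prior_arrests = rearrests = reoffenses = 0
        for _ in range(n_per_group):
            # Behavior comes from the same distribution for both groups.
            offended_before = rng.random() < offense_rate
            offends_after = rng.random() < offense_rate
            # Only the arrest step depends on group membership.
            if offended_before and rng.random() < p_arrest:
                prior_arrests += 1
            if offends_after:
                reoffenses += 1
                if rng.random() < p_arrest:
                    rearrests += 1
        results[group] = {
            "prior_arrest_rate": prior_arrests / n_per_group,
            "rearrest_rate": rearrests / n_per_group,
            "true_reoffense_rate": reoffenses / n_per_group,
        }
    return results


if __name__ == "__main__":
    for group, rates in simulate().items():
        print(group, rates)
```

Under these assumptions, a tool that scores risk from prior arrests would rate group B as riskier, and a validation based on rearrests would appear to confirm that rating, even though the simulated reoffense rate is the same for both groups.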

We know that a black person and a white person are not equally likely to be stopped by police: Evidence on New York’s stop-and-frisk policy, investigatory stops, vehicle searches and drug arrests shows that black and Latino civilians are more likely to be stopped, searched and arrested than whites. In 2012, a white attorney spent days trying to get himself arrested in Brooklyn for carrying graffiti stencils and spray paint, a Class B misdemeanor. Even when police saw him tagging the City Hall gateposts, they sped past him, ignoring a crime for which 3,598 people were arrested by the New York Police Department the following year.

Before adopting risk-assessment tools in the judicial decision-making process, jurisdictions should demand that any tool being implemented undergo a thorough and independent peer-review process. We need more transparency and better data to learn whether these risk assessments have disparate impacts on defendants of different races. Foundations and organizations developing risk-assessment tools should be willing to release the data used to build these tools, so that researchers can evaluate their techniques for internal racial bias and problems of statistical interpretation. Even better, with multiple sources of data, researchers could identify biases in data generated by the criminal justice system before the data are used to make decisions about liberty. Unfortunately, producers of risk-assessment tools, even nonprofit organizations, have not voluntarily released anonymized data and computational details to other researchers, as is now standard in quantitative social science research.

For these tools to make racially unbiased predictions, they must use racially unbiased data. We cannot trust the current risk-assessment tools to make important decisions about our neighbors’ liberty unless we believe — contrary to social science research — that data on arrests offer an accurate and unbiased representation of behavior. Rather than telling us something new, these tools risk laundering bias: using biased history to predict a biased future.