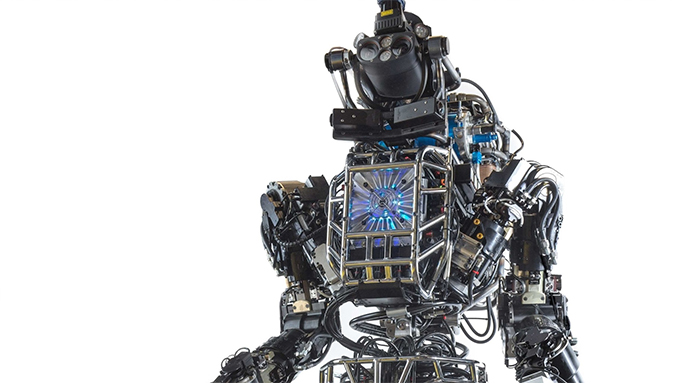

Should robots have human rights? Act now to regulate killer machines before they multiply and demand the right to vote, warns legal expert

Source: dailymail.co.uk

A legal expert has warned that the laws that govern robotics are playing catch-up to the technology and need to be updated in case robots 'wake up' and demand rights.

He also argues that artificial intelligence has come of age and that we should begin tackling these problems before they arise, as robots increasingly blur the line between person and machine.

'Robotics combines, for the first time, the promiscuity of data with the capacity to do physical harm,' Ryan Calo, from the University of Washington’s School of Law, wrote in his paper 'Robotics and the Lessons of Cyberlaw', published in the California Law Review.

'Robotic systems accomplish tasks in ways that cannot be anticipated in advance; and robots increasingly blur the line between person and instrument.'

There has been rising concern about the potential danger of artificial intelligence to humans, with prominent figures including Stephen Hawking and Elon Musk weighing in on the debate.

In January both signed an open letter to AI researchers warning of the dangers of artificial intelligence.

The letter warns that without safeguards on the technology, mankind could be heading for a dark future, with millions out of work or even the demise of our species.

Calo outlines a terrifying thought experiment showing why our laws might need updating to deal with the challenges posed by robots demanding the right to vote.

'Imagine that an artificial intelligence announces it has achieved self-awareness, a claim no one seems able to discredit,' Calo wrote.

'Say the intelligence has also read Skinner v. Oklahoma, a Supreme Court case that characterizes the right to procreate as “one of the basic civil rights of man.”

'The machine claims the right to make copies of itself (the only way it knows to replicate). These copies believe they should count for purposes of representation in Congress and, eventually, they demand a pathway to suffrage.

'Of course, conferring such rights to beings capable of indefinitely self-copying would overwhelm our system of governance.

'Which right do we take away from this sentient entity, then, the fundamental right to copy, or the deep, democratic right to participate?'

In other developments, last week computer scientist Professor Stuart Russell, of the University of California, Berkeley, said that artificial intelligence could be as dangerous as nuclear weapons.

In an interview with the journal Science for a special issue on artificial intelligence, he said: 'From the beginning, the primary interest in nuclear technology was the "inexhaustible supply of energy".

'The possibility of weapons was also obvious. I think there is a reasonable analogy between unlimited amounts of energy and unlimited amounts of intelligence.

'Both seem wonderful until one thinks of the possible risks. In neither case will anyone regulate the mathematics.

'The regulation of nuclear weapons deals with objects and materials, whereas with AI it will be a bewildering variety of software that we cannot yet describe.'

Source: dailymail.co.uk