Norway Joins the Race to Develop Killer Robots

Source: activistpost.com

Norway is a large exporter of weapons, which makes the resolution of the debate about creating killer robots an important issue for everyone.

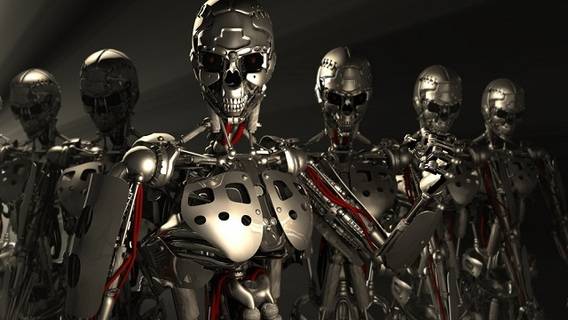

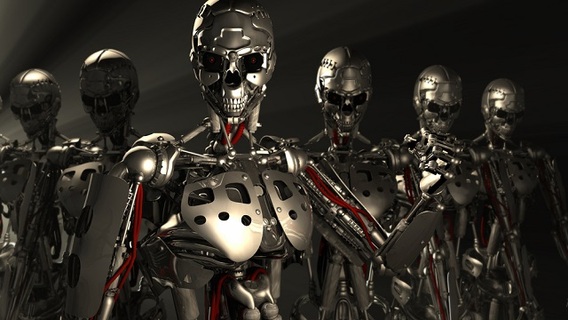

One could debate the overall merits or failings of robotic systems, but an area that has clearly become a point of concern on all sides is the emergence of "killer robots." According to robotics pioneer David Hanson, we are on a collision course with exponential growth in computing and technology that might give us only a "few years" to counter this scenario.

Even mainstream tech luminaries like Elon Musk and Stephen Hawking have proclaimed the magnitude of the threat following a reading of Nick Bostrom’s book Superintelligence. Musk flatly stated that robots infused with advanced artificial intelligence are "potentially more dangerous than nukes."

Robot-controlled missiles are being developed in Norway, and could easily be a path toward that ultimate danger.

Universities such as Cambridge, through its Centre for the Study of Existential Risk, have said that "terminators" are among the greatest threats to mankind. Human rights groups and concerned citizens have echoed this with the Campaign to Stop Killer Robots. The campaign has even garnered support from one Canadian robot manufacturer, who penned an open letter to his colleagues urging them not to delve into the dark side of robotics. Meanwhile, in the background, the United Nations and the U.S. military have been forced into the debate, but are currently stutter-stepping their way through the process.

As the clock ticks, a global drone and artificial intelligence arms race is under way as nations seek to catch up to others who have taken the lead. In perhaps one sign of how fast and far this proliferation extends, seemingly peaceful and neutral Norway is making the news as a debate rages there about plans to have artificial intelligence take over missile systems on its fighter jets. In reality, Norway is a large exporter of weapons, which makes the resolution of this debate an important issue for everyone.

There is a general move to augment traditional weapons systems with artificial intelligence. A recent post from the U.S. Naval Institute News stated that "A.I is going to be huge" by 2030. A.I. is foreseen "as a decision aid to the pilot in a way similar in concept to how advanced sensor fusion onboard jets like the F-22 and Lockheed Martin F-35 work now."

This is the first step toward removing human decision making. According to Norwegian press, their government states this explicitly:

The partially autonomously controlled missiles, or so-called "killer robots", will be used for airborne strikes for its new fighter jets and have the ability to identify targets and make decisions to kill without human interference. (emphasis added)

Interference?! What, like compassion and having a conscience? Make no mistake, at the highest levels that is exactly what it means. This was highlighted in a recent report that certainly has military elite across the planet concerned. It seems that the distance they have attempted to create by putting humans in cubicles thousands of miles from the actual battlefield still has not alleviated the emotional impact of killing. Apparently it’s not a video game after all.

Although drone operators may be far from the battlefield, they can still develop symptoms of post-traumatic stress disorder (PTSD), a new study shows.

About 1,000 United States Air Force drone operators took part in the study, and researchers found that 4.3 percent of them experienced moderate to severe PTSD.

That percentage might seem low, but the consequences can be incredible. Listen to what one former drone operator has to say about his role in 1,600 killings from afar.

The military system sees this reaction as a defect.

"I would say that, even though the percentage is small, it is still a very important number, and something that we would want to take seriously so that we make sure that the folks that are performing their job are effectively screened for this condition and get the help that they [may] need," said study author Wayne Chappelle, a clinical psychologist who consults for the USAF School of Aerospace Medicine at Wright-Patterson Air Force Base in Dayton, Ohio.

Consequently, the U.S. military has been pursuing "moral robots" that can supposedly discern right from wrong, probably to alleviate the growing pushback from those wondering what happens, legally and ethically, to a society that transfers responsibility from humans to machines. Some research indicates that robotics and A.I. are not yet up to even the most basic ethical tasks, yet their role in weapons systems continues.

[...]
