Twitter surveys users on their ethnicity when it warns them for wrongthink

Combating so-called “hate speech” seems to have become a huge priority for social media platforms these days. In Orwellian fashion, Twitter has started testing a feature that notifies users when they use language that Twitter thinks they should think twice about.

“When things get heated, you may say things you don’t mean. To let you rethink a reply, we’re running a limited experiment on iOS with a prompt that gives you the option to revise your reply before it’s published if it uses language that could be harmful,” Twitter Support said when it announced the feature.

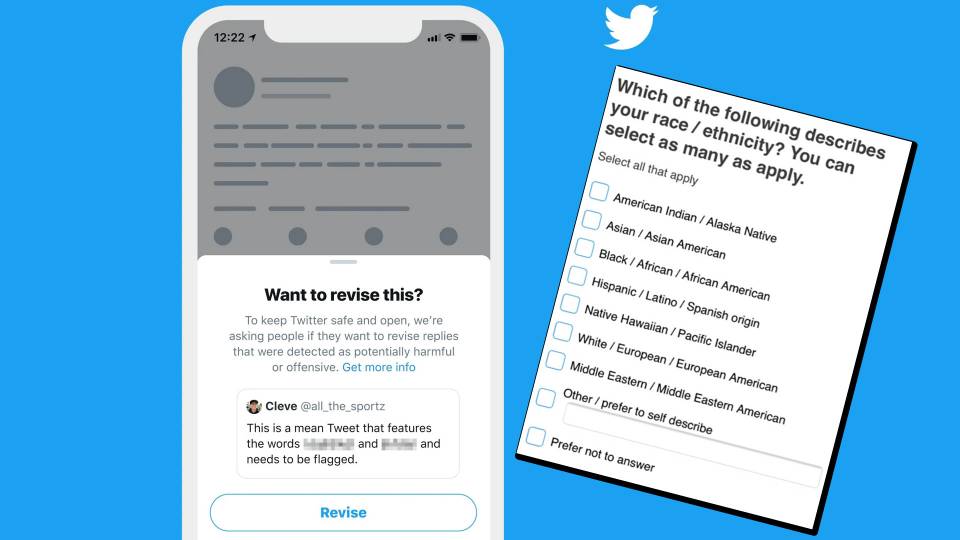

Here’s how the feature works: whenever a user types a reply and hits the send button, Twitter quickly analyses the reply, looks for words or phrases that have frequently been reported in the past, and generates a notification accordingly. If the reply contains language the company considers “harmful,” the user is asked to reconsider it.

While Twitter subtly describes this as asking users to “rethink” their replies, it looks more like an effort to heavily moderate speech across the platform. With such practices in place, it would be no exaggeration to expect people to become even more guarded about expressing their views online.

It has been a few months since users started receiving this dubious notification whenever they post a tweet that Twitter wants to correct their thinking on. Now Twitter has annoyed users further by surveying them on their ethnicity when they make a statement it finds problematic.

Twitter user @BadCrippIe shared what the platform asked her when she tried to post a tweet it objected to.

For a long time now, Twitter has been pressured to address “hate speech.” Both users and Twitter’s algorithm regularly flag posts across the platform. That said, it is worth noting that what some people deem offensive, others may not. What’s more, automated systems can wrongly flag a reply as hate speech because they cannot grasp the subtle nuances of human speech.

Sunita Saligram, the global head of site policy for trust and safety at Twitter, said the new rules were implemented to discourage people from being hateful on the platform in the first place, rather than to police repeat offenders who spread hate speech.

While some of Twitter’s more sensitive users will see this as a step in the right direction, others feel it is too intrusive and is becoming rather sinister.